Sequence Level Training with Recurrent Neural Networks

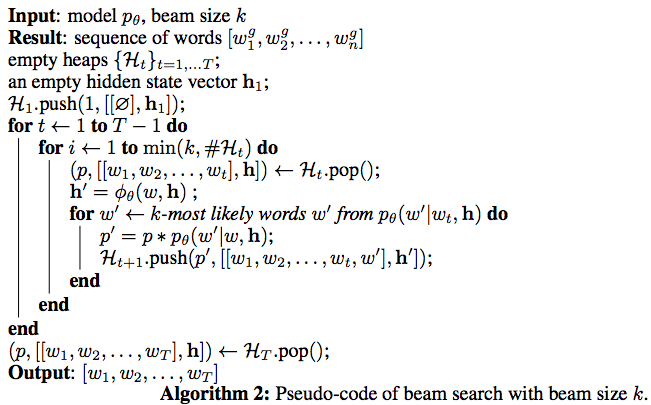

0 Beam Search Pseudo-Code
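The pseudo-code for this heading is not reproduced in the text. The Python sketch below is a generic beam search, not the paper's exact procedure. It assumes a hypothetical `step_fn(prefix)` that returns log-probabilities for every possible next token, and a hypothetical end-of-sequence token `"</s>"`. At each step it keeps only the `beam_width` highest-scoring partial sequences.

```python
import math
from typing import Callable, Dict, List, Tuple

Hyp = Tuple[Tuple[str, ...], float]  # (token sequence, total log-probability)


def beam_search(
    step_fn: Callable[[Tuple[str, ...]], Dict[str, float]],
    beam_width: int,
    max_len: int,
    eos: str = "</s>",
) -> List[Hyp]:
    """Return up to beam_width hypotheses, best first."""
    beams: List[Hyp] = [((), 0.0)]
    finished: List[Hyp] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates: List[Hyp] = [
            (prefix + (tok,), score + logp)
            for prefix, score in beams
            for tok, logp in step_fn(prefix).items()
        ]
        # Keep only the beam_width highest-scoring expansions.
        candidates.sort(key=lambda h: h[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == eos:
                finished.append((seq, score))  # complete: leaves the beam
            else:
                beams.append((seq, score))
        if not beams:
            break
    # Hypotheses still open at max_len are returned as well.
    return sorted(finished + beams, key=lambda h: h[1], reverse=True)[:beam_width]


# Toy next-token model (hypothetical, for illustration only).
TOY = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"</s>": 0.5, "c": 0.5},
    ("b",): {"</s>": 0.9, "c": 0.1},
}


def toy_step(prefix: Tuple[str, ...]) -> Dict[str, float]:
    return {tok: math.log(p) for tok, p in TOY.get(prefix, {"</s>": 1.0}).items()}


best = beam_search(toy_step, beam_width=2, max_len=3)
```

With this toy model, greedy decoding would commit to "a" (probability 0.6) and end with a total probability of 0.3. A beam of width 2 keeps "b" alive and finds "b </s>" with probability 0.36.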

1 Introduction

In previous seq2seq approaches, the model is trained to predict the next word given the previous ground-truth words as input. At test time, the resulting model generates the entire sequence one word at a time, feeding each generated word back as the input at the next time step.

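This process is problematic for two reasons. First, the model is trained on a different distribution of inputs than it sees at test time: words drawn from the data distribution, as opposed to words drawn from the model distribution. Second, the loss function used to train these models is at the word level. A popular choice is the cross-entropy loss, which maximizes the probability of the next correct word:

$$\mathcal{L} = -\sum_{t=1}^{T} \log p_\theta(w_t \mid w_1, \dots, w_{t-1})$$

However, the models are evaluated with sequence-level metrics such as BLEU, not with this word-level loss.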

This paper addresses both problems. It feeds the model's own predictions as inputs during training, and it directly optimizes a metric at the sequence level.

2 Model

2.1 Data As Demonstrator

2.2 End-to-End Backprop (E2E)

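At time step t+1, the input is the k highest-scoring words from the previous step, each contributing in proportion to its score. This smooth input keeps the whole process differentiable, so the model can be trained end to end with back-propagation.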
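The Python sketch below shows the smooth top-k input. It assumes the scores are softmax probabilities and renormalizes them over the top k. The renormalization, the toy embeddings and the function name `topk_weighted_input` are all assumptions made for illustration. In a real model the same weighted mixture would be computed inside an autodiff framework so that gradients can flow through it.

```python
from typing import List


def topk_weighted_input(
    probs: List[float], embeddings: List[List[float]], k: int
) -> List[float]:
    """Mix the embeddings of the k most probable words, weighted by probability."""
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)  # renormalize over the top k (assumption)
    mixed = [0.0] * len(embeddings[0])
    for i in top:
        weight = probs[i] / total
        for d, value in enumerate(embeddings[i]):
            mixed[d] += weight * value
    return mixed


probs = [0.5, 0.3, 0.2]                        # model's distribution at step t
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]     # toy word embeddings
x_next = topk_weighted_input(probs, emb, k=2)  # input at step t+1, about [0.625, 0.375]
```

With k = 1 this reduces to feeding back the single most probable word, as in ordinary greedy generation.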

2.3 Sequence level training

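The sequence-level objective is optimized with reinforcement learning (REINFORCE). The model is treated as a policy, and training maximizes the expected reward, such as BLEU, of the sequences it generates.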

In practice, they approximate the expectation with a single sample from the distribution of actions.
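The Python sketch below shows a single-sample REINFORCE estimate. To keep it short, it reduces the problem to one categorical decision with a softmax policy over logits; in the sequence setting, the reward would be a sequence-level score and the estimate would be summed over time steps. The `baseline` argument is a common variance-reduction device and is included here as an assumption. The toy rewards are hypothetical.

```python
import math
import random
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def reinforce_grad(
    logits: List[float],
    reward_fn: Callable[[int], float],
    rng: random.Random,
    baseline: float = 0.0,
) -> List[float]:
    """Single-sample estimate of d E[reward] / d logits for a softmax policy."""
    probs = softmax(logits)
    action = rng.choices(range(len(probs)), weights=probs)[0]  # one sampled action
    advantage = reward_fn(action) - baseline
    # d log p(action) / d logits = onehot(action) - probs
    return [advantage * ((1.0 if i == action else 0.0) - p) for i, p in enumerate(probs)]


# Averaging many single-sample estimates approaches the exact gradient,
# which here is p_i * (r_i - E[r]) = [0.25, -0.25].
rng = random.Random(0)
rewards = [1.0, 0.0]
n = 20000
avg = [0.0, 0.0]
for _ in range(n):
    g = reinforce_grad([0.0, 0.0], lambda a: rewards[a], rng)
    avg = [s + gi / n for s, gi in zip(avg, g)]
```

Each individual estimate is noisy, because it depends only on the one sampled action. It is unbiased, however, which is why a single sample per step is workable in practice.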

Generative Adversarial Text to Image Synthesis

1 Introduction

This work proposes a method to translate text into image pixels. One thorny remaining issue not solved by deep learning alone is that the distribution of images conditioned on a text is highly multimodal, in the sense that there are very many plausible configurations of pixels that correctly illustrate the description. This work develops a simple and effective GAN architecture and training strategy that enables compelling text to image synthesis of bird and flower images.

2 Model

The approach is to train a deep convolutional generative adversarial network (DCGAN) conditioned on text features. Both the generator network G and the discriminator network D perform feed-forward inference conditioned on the text feature.

2.1 Network

In the generator G, we sample a noise vector z and compress the text feature to a smaller dimension with a fully connected layer. We then concatenate the two vectors and feed the result into the network.
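The Python sketch below builds the generator input. The leaky ReLU after the compression layer, the slope 0.2, and all the toy sizes and weights are assumptions made for illustration; a real model would use a learned layer and much larger vectors.

```python
from typing import List


def leaky_relu(v: float, slope: float = 0.2) -> float:
    return v if v > 0 else slope * v


def dense(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    # Fully connected layer: one output per row of W.
    return [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]


def generator_input(z, text_emb, W, b):
    """Compress f(t) with a dense layer + leaky ReLU, then concatenate with z."""
    compressed = [leaky_relu(v) for v in dense(text_emb, W, b)]
    return z + compressed  # list concatenation: [z ; compressed f(t)]


z = [0.1, -0.2]                # noise sample (toy size)
f_t = [1.0, 2.0, 3.0]          # text embedding f(t) (toy size)
W = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0]]
b = [0.0, 0.0]
g_in = generator_input(z, f_t, W, b)
```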

In the discriminator D, we apply several layers of stride-2 convolution with spatial batch normalization, followed by leaky ReLU.

2.2 Match-aware discriminator (GAN-CLS)

Once G has learned to generate plausible images, it must also learn to align them with the conditioning information. Likewise, D must learn to evaluate whether samples from G meet this conditioning constraint. The discriminator therefore observes two kinds of error:

- unrealistic images, paired with any text;
- realistic images paired with text that mismatches the conditioning information.

GAN-CLS makes the second kind explicit: it also shows D real images with mismatched text, and trains D to score these pairs as fake.
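The Python sketch below computes the match-aware objectives. It follows the GAN-CLS training algorithm as we understand it: three discriminator scores are computed, one for each kind of image/text pair, and both error terms are averaged on the discriminator side. The probability values used are hypothetical.

```python
import math
from typing import Tuple


def gan_cls_objectives(s_r: float, s_w: float, s_f: float) -> Tuple[float, float]:
    """Return (D objective, G objective); both are to be maximized.

    s_r = D(real image, matching text)
    s_w = D(real image, mismatching text)
    s_f = D(generated image, matching text)
    """
    # D should score s_r high and both kinds of error (s_w, s_f) low.
    d_obj = math.log(s_r) + (math.log(1.0 - s_w) + math.log(1.0 - s_f)) / 2
    # G wants its images, paired with the right text, to be scored as real.
    g_obj = math.log(s_f)
    return d_obj, g_obj


good_d = gan_cls_objectives(s_r=0.9, s_w=0.1, s_f=0.1)[0]
fooled_d = gan_cls_objectives(s_r=0.9, s_w=0.9, s_f=0.1)[0]  # accepts mismatched text
```

A discriminator that accepts real images with the wrong text gets a lower objective. This is the signal that forces D to check text-image alignment rather than realism alone.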

2.3 Learning with manifold interpolation(GAN-INT)

The amount of text data is a limiting factor for image generation performance. It has been observed that deep networks tend to learn high-level representations in which interpolations between embeddings of training data also lie on or near the data manifold.

Motivated by this property, we can generate a large number of additional text embeddings simply by interpolating between embeddings of training-set captions.

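This can be viewed as adding an additional term to the generator objective, to be minimized:

$$\mathbb{E}_{t_1, t_2 \sim p_{data}}\left[\log\left(1 - D\left(G(z, \beta t_1 + (1-\beta) t_2)\right)\right)\right] \qquad (5)$$

Here, $t_1$ and $t_2$ are embeddings of two training captions, and $\beta$ controls the interpolation between them.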
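The Python sketch below generates the extra embeddings used by this term. It draws random pairs of training-caption embeddings and mixes them element-wise. The default β = 0.5, the toy embeddings and the function names are assumptions made for illustration.

```python
import random
from typing import List


def interpolate(t1: List[float], t2: List[float], beta: float = 0.5) -> List[float]:
    # beta * t1 + (1 - beta) * t2, element-wise
    return [beta * a + (1.0 - beta) * b for a, b in zip(t1, t2)]


def sample_interpolated(
    embeddings: List[List[float]], n: int, rng: random.Random, beta: float = 0.5
) -> List[List[float]]:
    """Draw n synthetic text embeddings from random pairs of caption embeddings."""
    out = []
    for _ in range(n):
        t1, t2 = rng.sample(embeddings, 2)  # two distinct training captions
        out.append(interpolate(t1, t2, beta))
    return out


captions = [[0.0, 2.0], [2.0, 0.0], [1.0, 1.0]]  # toy caption embeddings
extra = sample_interpolated(captions, n=5, rng=random.Random(0))
```

These synthetic embeddings have no paired real image. For that reason they enter only through the generator term, where G is asked to produce images that D accepts.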

2.4 Inverting the generator for style transfer

If the text encoding f(t) captures the image content, then the noise sample z should capture style factors such as background color and pose, which are needed to generate a realistic image. With a trained GAN, one may wish to transfer the style of a query image onto the content of a particular text description. To achieve this, one can train a convolutional network to invert G, regressing from a sample $x' \leftarrow G(z, f(t))$ back onto z. We use a simple squared loss to train the style encoder:

$$\mathcal{L}_{style} = \mathbb{E}_{t,\, z \sim \mathcal{N}(0, 1)} \left\| z - S\left(G(z, f(t))\right) \right\|_2^2$$

where S is the style encoder network. With a trained generator and style encoder, style transfer from a query image x onto text t proceeds as follows:

$$s \leftarrow S(x), \qquad x' \leftarrow G(s, f(t))$$

where x' is the resulting image and s is the predicted style.
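The Python sketch below shows the squared style loss and the two-step transfer. `toy_G` and `toy_S` are hypothetical stand-ins, not trained networks. Here the "image" is simply the style vector concatenated with the content vector, so the encoder can invert the generator exactly.

```python
from typing import Callable, List

Vec = List[float]


def style_loss(z: Vec, G: Callable[[Vec, Vec], Vec], S: Callable[[Vec], Vec], f_t: Vec) -> float:
    """Squared error || z - S(G(z, f(t))) ||^2 for one (z, t) sample."""
    z_hat = S(G(z, f_t))
    return sum((a - b) ** 2 for a, b in zip(z, z_hat))


def style_transfer(x: Vec, f_t: Vec, G, S) -> Vec:
    s = S(x)          # predicted style of the query image
    return G(s, f_t)  # re-render that style with the new text content


# Toy stand-ins (hypothetical): the "image" is just [style ; content].
def toy_G(z: Vec, f_t: Vec) -> Vec:
    return z + f_t


def toy_S(x: Vec) -> Vec:
    return x[:2]  # reads back the 2-dim style part


query = toy_G([0.3, 0.7], [1.0, 0.0])  # image with style [0.3, 0.7]
result = style_transfer(query, [0.0, 1.0], toy_G, toy_S)
```

In the toy, the result keeps the query's style part [0.3, 0.7] and takes its content from the new text. The real method aims for the same split, but with learned networks.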

A Neural Algorithm of Artistic Style

1 Introduction

This paper proposes an algorithm that separates an image into its style and its content, which can be used for image synthesis. For example, given a photograph of a scene A and a famous artwork B, we can recombine them into a single image that keeps the content of A and captures the gist of B's style. An example is shown below.

This can be done with neural networks, which are now prevalent. Concretely, an image can be represented by real-valued vectors computed by a deep neural network. In this paper, the authors use a pre-trained VGG convolutional network.

2 Model

The generated image must capture the content of one picture and the style of another. We therefore define an objective function with a content loss term with respect to one picture and a style loss term with respect to the other:

$$\mathcal{L}_{total}(p, a, x) = \alpha\, \mathcal{L}_{content}(p, x) + \beta\, \mathcal{L}_{style}(a, x)$$

x is the image to be generated; it is initialized as a white-noise image.

p is the image whose content x must match.

a is the image whose style x must match.

The content and style loss terms each have a weighting coefficient. Adjusting these coefficients controls whether the generated image favors fidelity to the content or fidelity to the style.

All model parameters, including those of the pre-trained VGG network, are fixed; only the input image x is optimized. Back-propagation carries the error from the objective function down to the input image x. The pixel values of x are then updated according to this back-propagated error, until x satisfies both the content constraint of p and the style constraint of a.
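The Python sketch below shows the key idea of optimizing the input while the network stays frozen. A fixed linear map `W` stands in for the pre-trained VGG network, which is a deliberate simplification. Gradient descent updates only `x`, starting from white noise, until its features match a target. The learning rate and step count are arbitrary choices for the toy.

```python
import random
from typing import List


def matvec(W: List[List[float]], x: List[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matvec_T(W: List[List[float]], v: List[float]) -> List[float]:
    # W^T v
    return [sum(W[r][c] * v[r] for r in range(len(W))) for c in range(len(W[0]))]


def optimize_input(W, target, x0, lr=0.1, steps=500):
    """Gradient descent on x for 0.5 * ||W x - target||^2; W is never updated."""
    x = list(x0)
    for _ in range(steps):
        residual = [f - t for f, t in zip(matvec(W, x), target)]
        grad = matvec_T(W, residual)  # gradient w.r.t. the input only
        x = [xi - lr * g for xi, g in zip(x, grad)]
    return x


W = [[1.0, 0.0], [0.0, 2.0]]  # frozen toy "network"
target = [1.0, 2.0]           # features the result should reproduce
rng = random.Random(0)
x0 = [rng.gauss(0.0, 1.0) for _ in range(2)]  # white-noise initialization
x = optimize_input(W, target, x0)
```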

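For the content loss,

$$\mathcal{L}_{content}(p, x, l) = \frac{1}{2} \sum_{i,j} \left(F^l_{ij} - P^l_{ij}\right)^2$$

F(l,i,j), written $F^l_{ij}$, is the activation of the i-th filter at position j in the l-th layer of the VGG network for the generated image x. $P^l_{ij}$ is the corresponding activation for p. Indexing by position j means the feature map of layer l is reshaped from [C,H,W] to [C,H*W]. This loss term can be viewed as a position-wise constraint on the feature maps of the generated picture.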
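The Python sketch below implements the reshape from [C,H,W] to [C,H*W] and the content loss, using plain nested lists. The tiny feature maps are hypothetical; real VGG layers have hundreds of channels.

```python
from typing import List

Matrix = List[List[float]]


def flatten_chw(fmap: List[List[List[float]]]) -> Matrix:
    """Reshape a [C][H][W] feature map into [C][H*W]."""
    return [[v for row in channel for v in row] for channel in fmap]


def content_loss(F: Matrix, P: Matrix) -> float:
    """0.5 * sum_{i,j} (F_ij - P_ij)^2 over filters i and positions j."""
    return 0.5 * sum(
        (f - p) ** 2 for f_row, p_row in zip(F, P) for f, p in zip(f_row, p_row)
    )


F = flatten_chw([[[1.0, 2.0]], [[3.0, 4.0]]])  # generated image, layer l (C=2, H=1, W=2)
P = flatten_chw([[[1.0, 0.0]], [[3.0, 5.0]]])  # content image, same layer
loss = content_loss(F, P)  # 0.5 * (2^2 + 1^2) = 2.5
```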

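For the style loss, the style representation of a layer is given by the correlations between every pair of its filter responses, that is, the Gram matrix

$$G^l_{ij} = \sum_k F^l_{ik} F^l_{jk}$$

Layer l contributes

$$E_l = \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \left(G^l_{ij} - A^l_{ij}\right)^2, \qquad \mathcal{L}_{style}(a, x) = \sum_l w_l E_l$$

where $A^l$ is the Gram matrix of a, $N_l$ is the number of filters in layer l, and $M_l$ is the size (height times width) of each feature map. The factor $w_l$ equals one divided by the number of active layers, that is, the layers with a non-zero loss weight $w_l$.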
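The Python sketch below computes the Gram matrix, the per-layer term $E_l$, and the equally weighted sum over active layers. The layer names (borrowed from VGG naming) and the tiny feature matrices are hypothetical examples.

```python
from typing import Dict, List

Matrix = List[List[float]]


def gram(F: Matrix) -> Matrix:
    """G_ij = sum_k F_ik F_jk for an N x M feature matrix (N filters, M positions)."""
    return [[sum(a * b for a, b in zip(Fi, Fj)) for Fj in F] for Fi in F]


def layer_style_loss(F: Matrix, A_feats: Matrix) -> float:
    N, M = len(F), len(F[0])
    G, A = gram(F), gram(A_feats)
    sq = sum((G[i][j] - A[i][j]) ** 2 for i in range(N) for j in range(N))
    return sq / (4 * N**2 * M**2)


def style_loss(x_layers: Dict[str, Matrix], a_layers: Dict[str, Matrix], active: List[str]) -> float:
    w = 1.0 / len(active)  # w_l = 1 / (number of active layers)
    return sum(w * layer_style_loss(x_layers[l], a_layers[l]) for l in active)


x_layers = {"conv1_1": [[1.0, 0.0]], "conv2_1": [[1.0, 0.0]]}
a_layers = {"conv1_1": [[0.0, 0.0]], "conv2_1": [[1.0, 0.0]]}
loss = style_loss(x_layers, a_layers, active=["conv1_1", "conv2_1"])  # 0.5 * (1/16) + 0
```

The Gram matrix sums over positions, so it discards where a feature occurs and keeps only which features occur together. This is why it captures style rather than layout.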

Generating Images with Recurrent Adversarial Networks

1 Introduction

This work integrates GANs with sequential generation. At each time step t, the generator takes noise sampled from a normal distribution, generates the current part of the image, and writes it onto a canvas. The parts written at all time steps accumulate on the canvas to form the final image. Unrolling the gradient-descent-based optimization that generates the target image yields a recurrent computation. In this computation, an encoder convolutional network extracts a code from the current canvas. The resulting code and the code for the reference image are fed into a decoder, which decides on an update to the canvas.

2 About GAN

The game is played between a generative model G and a discriminative model D. The generative model produces samples that are hard for D to distinguish from real data, and the discriminator tries to avoid being fooled by G.

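The objective function is:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p(z)}\left[\log\left(1 - D(G(z))\right)\right] \qquad (1)$$

The term log(1 - D(G(z))) saturates when D confidently rejects generated samples. Too little gradient then flows through the generative model G, and these small gradients prevent it from learning. We therefore remedy the objective function as

$$\max_D V(D, G) = \mathbb{E}_{x \sim p_{data}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p(z)}\left[\log\left(1 - D(G(z))\right)\right], \qquad \max_G V'(D, G) = \mathbb{E}_{z \sim p(z)}\left[\log D(G(z))\right] \qquad (2)$$

and learn the two objectives separately. With D parameterized by $\phi$, G by $\theta$, and learning rate $\eta$, the parameters are updated by gradient ascent:

$$\phi \leftarrow \phi + \eta \nabla_\phi V(D, G), \qquad \theta \leftarrow \theta + \eta \nabla_\theta V'(D, G)$$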
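The Python sketch below illustrates why the generator objective is changed. It assumes D outputs sigmoid(a) for a logit a on a generated sample, and compares the gradient with respect to a of the original term log(1 - D(G(z))) with that of the replacement log D(G(z)).

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def grad_saturating(a: float) -> float:
    """d/da log(1 - sigmoid(a)): G would minimize this term."""
    return -sigmoid(a)


def grad_non_saturating(a: float) -> float:
    """d/da log(sigmoid(a)): G maximizes this term instead."""
    return 1.0 - sigmoid(a)


# a is D's logit for a generated sample; early in training D rejects fakes, so a << 0.
for a in (-6.0, 0.0, 6.0):
    print(f"a={a:+.0f}  saturating={grad_saturating(a):+.4f}  non-saturating={grad_non_saturating(a):+.4f}")
```

At a = -6, where D is confident the sample is fake, the original term's gradient has magnitude below 0.01, while the replacement's gradient is close to 1. The replacement therefore gives G a useful learning signal exactly when it needs one most.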

3 Model

We propose sequential modeling using GANs. The obvious advantage of sequential modeling is that repeatedly generating outputs conditioned on previous states simplifies the problem: a complicated data distribution is mapped to a sequence of simpler modeling problems.

GRAN: generative recurrent adversarial networks.

The generator G consists of a recurrent feedback loop. It takes a sequence of noise samples drawn from the prior distribution p(z) and draws an output onto the canvas at each time step.

At each time step t, a sample z from the prior distribution is passed to a function f(.) together with the hidden state h(c,t). The hidden state h(c,t) encodes the previous drawing C(t-1) and is computed from it by a function g(.). C(t) is the canvas at time t, which contains the output of f(.). The function g(.) can be seen as a way of mimicking the inverse of f(.). In this work we use
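The Python sketch below shows the recurrent drawing loop. `toy_f` and `toy_g` are hypothetical stand-ins for the decoder f(.) and the encoder g(.). Starting from an empty (all-zero) canvas is an assumption. The same noise z is reused at every step, and each step's output is added onto the canvas.

```python
from typing import Callable, List

Vec = List[float]


def gran_sample(
    z: Vec,
    f: Callable[[Vec, Vec], Vec],
    g: Callable[[Vec], Vec],
    T: int,
    canvas_size: int,
) -> Vec:
    """Draw for T steps, reusing the same noise z, and accumulate on a canvas."""
    canvas = [0.0] * canvas_size  # empty canvas before the first step (assumption)
    for _ in range(T):
        h = g(canvas)    # h(c,t): encoding of the previous drawing C(t-1)
        delta = f(z, h)  # what step t draws
        canvas = [c + d for c, d in zip(canvas, delta)]  # canvas after step t
    return canvas


# Toy stand-ins (hypothetical) for the decoder f and encoder g.
def toy_f(z: Vec, h: Vec) -> Vec:
    return [zi + h[0] for zi in z]


def toy_g(canvas: Vec) -> Vec:
    return [sum(canvas)]


image = gran_sample([1.0, 0.0], toy_f, toy_g, T=2, canvas_size=2)
```

Because g reads the canvas back at every step, later steps can react to what earlier steps drew. Here the second step's update depends on the first step's output.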

The influence of the noise vector on the generated image.

We sample a noise vector from p(z) and use the same noise for every time step.

During the experiment, it is more time consuming to find a set of

On the other hand, using different noise at each time step increases the model's capability of generating

Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks

1 Introduction

This work uses a cascade of convolutional networks (ConvNets) within the Laplacian pyramid framework to generate images in a coarse-to-fine fashion. At each level of the pyramid, an independent ConvNet is trained with the Generative Adversarial Network approach and captures image structure at a particular scale. Instead of generating an image in one shot, the model generates it in a sequence of stages. First, a random vector is fed to a ConvNet, which outputs an image at the coarsest level. The second stage samples the image structure at the next level, conditioned on the image generated before. Subsequent levels continue this process, always conditioning on the output from the previous scale, until the final level is reached.

2 Approach

2.1 Generative Adversarial Network

The method pits two networks against one another:

- a generative model G that captures the data distribution. G takes a noise vector z drawn from a distribution Pnoise(z) and outputs an image h'.
- a discriminative model D that distinguishes between samples drawn from G and samples of the image data. D takes as input an image chosen stochastically to be either h', as generated by G, or h, a real image drawn from the training data distribution Pdata(h). D outputs a scalar probability, which is trained to be high if the input was real and low if it was generated by G.

2.2 Laplacian pyramid

Let d(.) be a downsampling operation: an image I of shape (W,H) gives d(I) of shape (W/2,H/2).

Let u(.) be an upsampling operation: an image I of shape (W/2,H/2) gives u(I) of shape (W,H).

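The coefficients $h_k$ at each level k of the Laplacian pyramid are constructed by taking the difference between adjacent levels of the Gaussian pyramid $[I_0, I_1, \dots, I_K]$, where $I_0 = I$ and $I_{k+1} = d(I_k)$:

$$h_k = I_k - u(I_{k+1}) \qquad (3)$$

At the final level, $h_K = I_K$ (the low-frequency residual). The image is recovered recursively by $I_k = u(I_{k+1}) + h_k$.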
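The Python sketch below builds and inverts a Laplacian pyramid on a small 2D list. It assumes simple stand-ins for the paper's operators: d(.) averages 2x2 blocks instead of blurring and decimating, and u(.) repeats pixels (nearest-neighbour). The image side must be divisible by 2^K.

```python
from typing import List

Image = List[List[float]]


def down(img: Image) -> Image:
    """d(.): halve each side by averaging 2x2 blocks (stand-in for blur + decimate)."""
    return [
        [
            (img[2 * i][2 * j] + img[2 * i][2 * j + 1]
             + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(len(img[0]) // 2)
        ]
        for i in range(len(img) // 2)
    ]


def up(img: Image) -> Image:
    """u(.): double each side by nearest-neighbour repetition."""
    return [[img[i // 2][j // 2] for j in range(2 * len(img[0]))] for i in range(2 * len(img))]


def combine(a: Image, b: Image, sign: float) -> Image:
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def laplacian_pyramid(img: Image, K: int) -> List[Image]:
    gauss = [img]  # I_0 = I, I_{k+1} = d(I_k)
    for _ in range(K):
        gauss.append(down(gauss[-1]))
    h = [combine(gauss[k], up(gauss[k + 1]), -1.0) for k in range(K)]  # h_k = I_k - u(I_{k+1})
    h.append(gauss[K])  # h_K = I_K
    return h


def reconstruct(h: List[Image]) -> Image:
    img = h[-1]
    for hk in reversed(h[:-1]):
        img = combine(up(img), hk, 1.0)  # I_k = u(I_{k+1}) + h_k
    return img


I = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # toy 4x4 image
coeffs = laplacian_pyramid(I, K=2)
```

Reconstruction is exact for any choice of d and u, because each $h_k$ stores exactly what upsampling the coarser level fails to recover.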

2.3 Laplacian Generative Adversarial Network

We have a set of generative ConvNet models $\{G_0, G_1, \dots, G_K\}$. Each captures the distribution of the coefficients $h_k$ for natural images at a different level of the Laplacian pyramid.

Note that models at all levels except the final are conditional generative models that take an upsampled version of the current image I'k+1 as a conditioning variable, in addition to the noise vector Zk.
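The Python sketch below shows the coarse-to-fine sampling procedure. It starts from the unconditional model's output $I'_K$. At each finer level it upsamples the current image, asks $G_k$ for coefficients $h'_k$ conditioned on that upsampled image, and adds them: $I'_k = u(I'_{k+1}) + G_k(z_k, u(I'_{k+1}))$. The generators and the noise function are hypothetical toys, and nearest-neighbour upsampling is an assumed stand-in for u(.).

```python
from typing import Callable, List

Image = List[List[float]]


def up(img: Image) -> Image:
    """u(.): nearest-neighbour 2x upsampling (stand-in)."""
    return [[img[i // 2][j // 2] for j in range(2 * len(img[0]))] for i in range(2 * len(img))]


def lapgan_sample(
    G_final: Callable[[float], Image],
    G_cond: List[Callable[[float, Image], Image]],
    noise: Callable[[int], float],
) -> Image:
    """G_cond[k] is G_k for k = 0..K-1; G_final is the unconditional G_K."""
    K = len(G_cond)
    img = G_final(noise(K))           # coarsest image I'_K
    for k in range(K - 1, -1, -1):
        low = up(img)                 # u(I'_{k+1}): conditioning image
        h = G_cond[k](noise(k), low)  # generated coefficients h'_k
        img = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(low, h)]  # I'_k
    return img


# Toy generators (hypothetical): a 1x1 start and residuals filled with the noise value.
def toy_final(z: float) -> Image:
    return [[1.0 + z]]


def toy_cond(z: float, low: Image) -> Image:
    return [[z for _ in row] for row in low]


sample = lapgan_sample(toy_final, [toy_cond, toy_cond], noise=lambda k: 0.0)
```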

The generative models $\{G_0, G_1, \dots, G_K\}$ are trained with the conditional GAN approach at each level of the pyramid. We construct a Laplacian pyramid from each training image I. At each level, we make a stochastic decision to either (i) construct the coefficients $h_k$ using Equation (3), or (ii) generate them using $G_k$.

$G_k$ takes as input the low-pass image $l_k = u(I_{k+1})$, as well as a noise vector $z_k$. $D_k$ takes as input $h_k$ or $h'_k$, along with the low-pass image $l_k$, and predicts whether its input was real or generated.

Paper Reading: Image Captioning with Semantic Attention

1 Introduction

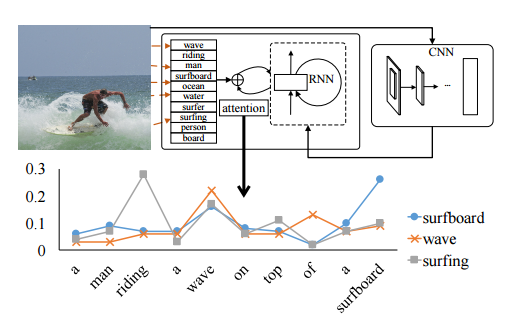

This paper proposes an attention mechanism over the visual attributes of an image, integrated into an RNN framework. At each time step, the attention over visual attributes and the information from the previous time step are used to generate the current output. The authors regard this proposal as a combination of two existing approaches:

- the top-down approach, which extracts a gist feature (a real-valued vector) from the image and generates the caption with an RNN;
- the bottom-up approach, which extracts several discrete visual attributes from the image and builds the sentence from them.

2 Model

2.1 Overall framework

They first extract a global feature of the whole image, which serves as a quick overview of the image content. They also apply a set of attribute detectors, each corresponding to an entry in the vocabulary, to obtain the detected attributes, denoted $\{A_i\}$.

2.2 The input attention model

w models the relative importance of the visual attributes in each dimension of the word space.
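The Python sketch below is a simplified version of the input attention. It assumes that:

- attention weights come from a softmax over bilinear scores between the previous word's embedding and each attribute embedding;
- the attended attributes are scaled element-wise by w and added to the previous word's embedding.

The final projection into the RNN input space is omitted. The exact form, the matrix `U` and all values are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Vec = List[float]


def softmax(scores: Vec) -> Vec:
    m = max(scores)
    e = [math.exp(s - m) for s in scores]
    total = sum(e)
    return [x / total for x in e]


def bilinear(a: Vec, U: List[Vec], b: Vec) -> float:
    # a^T U b
    return sum(a[i] * U[i][j] * b[j] for i in range(len(a)) for j in range(len(b)))


def input_attention(y_prev: Vec, attrs: List[Vec], U: List[Vec], w: Vec) -> Tuple[Vec, Vec]:
    """Attend over attribute embeddings using the previous word, then mix them in."""
    alpha = softmax([bilinear(y_prev, U, y) for y in attrs])
    pooled = [sum(a * y[d] for a, y in zip(alpha, attrs)) for d in range(len(y_prev))]
    # w rescales each dimension of the attended attribute vector before it is added
    x = [yp + wd * pd for yp, wd, pd in zip(y_prev, w, pooled)]
    return x, alpha


U = [[1.0, 0.0], [0.0, 1.0]]
x_t, alpha = input_attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], U, w=[0.5, 0.5])
```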

2.3 Output attention model

V is the bilinear parameter matrix. The activation function ensures that the same nonlinear transform is applied to the two feature vectors before they are compared.
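The Python sketch below computes output attention weights proportional to exp(h^T V act(y_i)). It assumes tanh as the activation, applied to each attribute vector so that it passes through the same nonlinearity as the hidden state h. The values are toy examples.

```python
import math
from typing import Callable, List

Vec = List[float]


def output_attention(
    h: Vec, attrs: List[Vec], V: List[Vec], act: Callable[[float], float] = math.tanh
) -> Vec:
    """beta_i proportional to exp(h^T V act(y_i))."""
    feats = [[act(v) for v in y] for y in attrs]  # same nonlinearity as the hidden state
    scores = [
        sum(h[i] * V[i][j] * f[j] for i in range(len(h)) for j in range(len(f)))
        for f in feats
    ]
    m = max(scores)
    e = [math.exp(s - m) for s in scores]
    total = sum(e)
    return [x / total for x in e]


beta = output_attention([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0]], [[1.0, 0.0], [0.0, 1.0]])
```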

2.4 Model learning

p > 1 penalizes excessive attention paid to any single attribute, accumulated over the entire sentence. q < 1 penalizes attention diverted to multiple attributes at any particular time step.
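The Python sketch below implements the regularizer as we understand its form. It has two terms:

- a p-norm across attributes of the attention accumulated over the sentence, $[\sum_i (\sum_t \alpha_t^i)^p]^{1/p}$;
- a sum over time steps of a q-"norm" across attributes, $\sum_t [\sum_i (\alpha_t^i)^q]^{1/q}$.

The exact form and the defaults p = 2 and q = 0.5 are assumptions. The toy attention patterns show which behaviour each term penalizes.

```python
from typing import List


def attention_regularizer(alpha: List[List[float]], p: float = 2.0, q: float = 0.5) -> float:
    """alpha[t][i] is the attention on attribute i at time step t."""
    T, N = len(alpha), len(alpha[0])
    # p > 1: large when one attribute accumulates attention over the whole sentence
    per_attr = [sum(alpha[t][i] for t in range(T)) for i in range(N)]
    term_p = sum(a ** p for a in per_attr) ** (1.0 / p)
    # q < 1: large when attention at a single step is spread over many attributes
    term_q = sum(sum(alpha[t][i] ** q for i in range(N)) ** (1.0 / q) for t in range(T))
    return term_p + term_q


focused = [[1.0, 0.0], [1.0, 0.0]]      # same attribute at every step
alternating = [[1.0, 0.0], [0.0, 1.0]]  # one attribute per step, changing over time
diffuse = [[0.5, 0.5], [0.5, 0.5]]      # spread over attributes at each step
```

Under this form, the alternating pattern scores lowest (about 3.41). Repeatedly attending to the same attribute scores 4.0, and spreading attention within a step scores about 5.41.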

2.5 Visual attribute prediction

They build a fixed set of visual attributes by selecting the most common words from the captions in the training data. The resulting attributes are treated as a set of predefined categories and are learned as a conventional classification problem.

DenseCap: Fully Convolutional Localisation Networks for Dense Captioning

1 Introduction

This paper addresses object localization and image captioning jointly by proposing a Fully Convolutional Localization Network (FCLN). The architecture is composed of a ConvNet, a novel dense localization layer, and an RNN language model that generates label sequences. The goal is to design an architecture that jointly localizes regions of interest and then describes each with natural language.

2 Model Architecture

2.1 Convolutional Network.

They use the VGG-16 architecture, which consists of 13 layers of 3*3 convolutions interspersed with 5 layers of 2*2 max pooling. The final pooling layer is removed, so an input image of shape 3*W*H gives rise to a tensor of features of shape C*W'*H', where C = 512, W' = ⌊W/16⌋, and H' = ⌊H/16⌋.
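The Python sketch below is a quick shape calculation. It assumes 3*3 convolutions with padding 1, which preserve spatial size, and four remaining 2*2 stride-2 pooling layers, each of which halves the size with flooring. The image size is a hypothetical example.

```python
from typing import Tuple


def densecap_feature_shape(W: int, H: int, C: int = 512, pools: int = 4) -> Tuple[int, int, int]:
    """Shape C x W' x H' after VGG-16 with its final (5th) pooling layer removed."""
    # 3x3 convolutions (padding 1) keep the spatial size; each 2x2 stride-2 pool floors it by half.
    w, h = W, H
    for _ in range(pools):
        w, h = w // 2, h // 2
    return C, w, h


shape = densecap_feature_shape(720, 600)  # (512, 45, 37)
```

Repeated floor-halving four times equals ⌊x/16⌋, which matches the W' and H' formulas above.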

2.2 Fully Convolutional Localization Layer

The layer receives an input tensor of activations, identifies spatial regions of interest, and smoothly extracts a fixed-size representation from each region.

A) Inputs/Outputs:

The localization layer accepts a tensor of activations of size C*W'*H', selects B regions of interest, and returns three output tensors giving information about each region:

1 Region Coordinates: a matrix of shape B*4 giving the bounding-box coordinates of each region.

2 Region Scores: a vector of length B giving a confidence score for each region.

3 Region Features: a tensor of shape B*C*X*Y giving features for each region, represented by an X*Y grid of C channels.

B) Convolutional Anchors:

We project each point in the W'*H' grid of input features back into the W*H image plane, and consider k anchor boxes of different aspect ratios centered at this projected point.

More specifically, the layer produces 5k*W'*H' values. For each grid point and each of its k anchors, there are a confidence score and four scalars that regress from the anchor to the predicted region coordinates in the W*H image plane.
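The Python sketch below generates anchors and applies regression offsets. It makes two assumptions. First, it assumes each feature-grid point projects to the image plane at ((x + 0.5) * 16, (y + 0.5) * 16), since 16 is the network's total stride. Second, it assumes the four regressed scalars follow the Faster R-CNN-style parameterization (center shifts scaled by anchor size, log-scale width and height). The anchor shapes are hypothetical.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (center x, center y, width, height)


def make_anchors(W_feat: int, H_feat: int, shapes: List[Tuple[float, float]], stride: int = 16) -> List[Box]:
    """k anchors (one per shape) centred on each feature-grid point projected to the image."""
    anchors = []
    for gy in range(H_feat):
        for gx in range(W_feat):
            cx, cy = (gx + 0.5) * stride, (gy + 0.5) * stride  # projection (assumed)
            for w, h in shapes:
                anchors.append((cx, cy, w, h))
    return anchors


def apply_offsets(anchor: Box, t: Tuple[float, float, float, float]) -> Box:
    """Faster R-CNN-style box regression (assumed parameterization)."""
    xa, ya, wa, ha = anchor
    tx, ty, tw, th = t
    return (xa + tx * wa, ya + ty * ha, wa * math.exp(tw), ha * math.exp(th))


shapes = [(32.0, 32.0), (64.0, 32.0), (32.0, 64.0)]  # k = 3 aspect ratios (hypothetical)
anchors = make_anchors(W_feat=4, H_feat=3, shapes=shapes)
num_predictions = 5 * len(shapes) * 4 * 3  # 5k * W' * H' values from the layer
```

With zero offsets, a predicted box equals its anchor, so the regression only has to learn small corrections.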

C) Box Regression

D) Box Sampling: not all region proposals are considered; only a subset of them is processed further.

E) Bilinear Interpolation:

Extract a fixed-size feature representation for each variably sized region proposal.

Given an input feature map U of shape C*W'*H' and a region proposal, we interpolate the features of U to produce an output feature map V of shape C*X*Y. After projecting the region proposal onto U we compute a sampling grid G of shape X*Y*2 associating each element of V with real-valued coordinates into U.
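The Python sketch below fills V from U using the sampling grid. It uses bilinear interpolation with the kernel k(d) = max(0, 1 - |d|):

$$V_{c,i,j} = \sum_{i',j'} U_{c,i',j'}\, k(i' - G_{i,j,1})\, k(j' - G_{i,j,2})$$

It handles a single channel, since every channel is treated the same way, and it assumes the grid coordinates are already given. Computing the grid from the region proposal is omitted. The brute-force double loop is for clarity only; at most four neighbours have non-zero weight.

```python
from typing import List, Tuple

Map = List[List[float]]  # one channel of U, indexed [i'][j']


def kernel(d: float) -> float:
    # bilinear kernel: weight 1 at distance 0, falling to 0 at distance 1
    return max(0.0, 1.0 - abs(d))


def bilinear_sample(U: Map, grid: List[List[Tuple[float, float]]]) -> Map:
    """V[i][j] = sum_{i', j'} U[i'][j'] * k(i' - G[i][j][0]) * k(j' - G[i][j][1])."""
    return [
        [
            sum(
                U[ip][jp] * kernel(ip - gi) * kernel(jp - gj)
                for ip in range(len(U))
                for jp in range(len(U[0]))
            )
            for (gi, gj) in row
        ]
        for row in grid
    ]


U = [[0.0, 1.0], [2.0, 3.0]]
V = bilinear_sample(U, [[(0.5, 0.5), (1.0, 0.5)]])  # [[1.5, 2.5]]
```

Because the kernel is piecewise linear in the grid coordinates, V is differentiable with respect to both U and the box coordinates. This lets the localization layer be trained end to end.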

2.3 Recognition Network

A fully connected neural network that processes the region features from the localization layer.

2.4 RNN Language Model

The region code is fed to the RNN only at the first time step.