Spatial Transformer Networks

1 Introduction

A desirable property of a system that reasons about images is the ability to disentangle object pose and part deformation from texture and shape.

CNNs lack the ability to be spatially invariant to the input data in a computationally and parameter-efficient manner. To overcome this drawback, the authors propose a new learnable module, the spatial transformer, which explicitly allows spatial manipulation of data within the network and is differentiable. It can be plugged into a standard neural network to provide spatial transformation capabilities.

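At present, few CNNs can maintain spatial invariance, yet it is a very useful property for recognizing objects. An image of a dog should still be recognized as a dog after it has been cropped, translated, or warped. The spatial transformer network is introduced so that a CNN can recognize this correctly. How the image is transformed depends on the input, and the transformation parameters are learned automatically so as to make the overall cost as small as possible.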

2 Spatial Transformers

The transformation is conditioned on the input feature map and produces a single output feature map. The module has three parts. First, a localization network takes the input feature map and, through a number of hidden layers, outputs the parameters of the spatial transformation that should be applied to the feature map. Second, a grid generator uses the predicted parameters to create a sampling grid, which is a set of points where the input map should be sampled to produce the transformed output. Finally, the sampler takes the feature map and the sampling grid as inputs and produces the output map.

2.1 Localization Network

f_loc() can take any form, such as a fully connected or convolutional network, but should include a final regression layer to produce the transformation parameters θ.

2.2 Parameterised Sampling Grid

To perform a warping of the input feature map, each output pixel is computed by applying a sampling kernel centered at a particular location in the input feature map.

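There are many kinds of transformations. For a 2D affine transformation, the formula is:

(xs, ys)^T = A_θ (xt, yt, 1)^T, where A_θ = [θ11 θ12 θ13; θ21 θ22 θ23]

Here (xs, ys) are the source coordinates in the input feature map and (xt, yt) are the target coordinates of the regular grid in the output feature map.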

The transformation defined above allows cropping, translation, rotation, scale, and skew to be applied to the input feature map, and requires only 6 parameters.
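As a rough illustration of the grid generator, the sketch below computes the source coordinates for every target pixel from a 2*3 affine matrix. The function name `affine_grid`, the use of NumPy, and the [-1, 1] coordinate normalization are choices made for this sketch, not code from the paper.

```python
import numpy as np

def affine_grid(theta, out_h, out_w):
    """Return source coordinates (xs, ys) for every target pixel.

    theta: the 6 affine parameters as a (2, 3) matrix.
    Coordinates are normalized to [-1, 1] (an assumption of this sketch).
    """
    theta = np.asarray(theta, dtype=float).reshape(2, 3)
    # Regular target grid of (xt, yt) points.
    xt, yt = np.meshgrid(np.linspace(-1.0, 1.0, out_w),
                         np.linspace(-1.0, 1.0, out_h))
    # Homogeneous target coordinates, shape (3, out_h * out_w).
    target = np.stack([xt.ravel(), yt.ravel(), np.ones(xt.size)])
    source = theta @ target
    xs = source[0].reshape(out_h, out_w)
    ys = source[1].reshape(out_h, out_w)
    return xs, ys

# Halving the scale makes the grid cover only the central part of the input.
zoom_xs, zoom_ys = affine_grid([[0.5, 0.0, 0.0], [0.0, 0.5, 0.0]], 4, 4)
```

With the identity matrix [[1, 0, 0], [0, 1, 0]] the source grid coincides with the target grid, so the output would be an unwarped copy of the input.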

2.3 Differentiable Image Sampling

The sampler takes the set of sampling points T_θ(G) along with the input feature map U and produces the sampled output feature map V.
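One common choice of sampling kernel is bilinear interpolation. The sketch below is a minimal single-channel version, assuming source coordinates normalized to [-1, 1] and zero contribution from points outside the input; the function name `bilinear_sample` and these conventions are assumptions of the sketch.

```python
import numpy as np

def bilinear_sample(U, xs, ys):
    """Sample a single-channel feature map U (H, W) at normalized source
    coordinates xs, ys in [-1, 1]; returns V with the shape of xs."""
    U = np.asarray(U, dtype=float)
    H, W = U.shape
    # Map normalized coordinates to pixel indices.
    x = (np.asarray(xs, dtype=float) + 1.0) * (W - 1) / 2.0
    y = (np.asarray(ys, dtype=float) + 1.0) * (H - 1) / 2.0
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1, y1 = x0 + 1, y0 + 1
    # Bilinear weights from the fractional offsets.
    wx1, wy1 = x - x0, y - y0
    wx0, wy0 = 1.0 - wx1, 1.0 - wy1

    def gather(yy, xx):
        # Neighbours outside the input contribute zero.
        inside = (xx >= 0) & (xx < W) & (yy >= 0) & (yy < H)
        vals = U[np.clip(yy, 0, H - 1), np.clip(xx, 0, W - 1)]
        return np.where(inside, vals, 0.0)

    return (wy0 * wx0 * gather(y0, x0) + wy0 * wx1 * gather(y0, x1) +
            wy1 * wx0 * gather(y1, x0) + wy1 * wx1 * gather(y1, x1))
```

Because each output value is a weighted sum of input values, with weights that depend piecewise-linearly on the coordinates, gradients can flow both to U and to the sampling grid.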

2.4 Some take-aways

Placing spatial transformers within a CNN allows the network to learn how to actively transform the feature maps to help minimize the overall cost function of the network during training.

It is also possible to use spatial transformers to downsample a feature map.

Finally, it is possible to have multiple spatial transformers in a CNN. Placing multiple spatial transformers at increasing depths of a network allows transformations of increasingly abstract representations. One can also use multiple spatial transformers in parallel; this can be useful if there are multiple objects or parts of interest in a feature map that should be focused on individually.

Generating Images From Captions With Attention

1 Introduction

This work proposes a sequential deep learning model that generates images from captions. The model draws patches on a canvas over a series of time steps while attending to the relevant words of the caption at each step.

2 Related work

Deep discriminative Model VS Deep Generative Model

Earlier generative models focused on Boltzmann Machines and Deep Belief Networks. Their drawback is that each requires a computationally costly MCMC step to approximate derivatives of an intractable partition function, which makes them difficult to scale to large datasets.

A variational auto-encoder can be seen as a neural network with continuous latent variables. The encoder is used to approximate the posterior distribution, and the decoder is used to stochastically reconstruct the data from the latent variables.

Generative Adversarial Networks use noise-contrastive estimation to avoid calculating an intractable partition function. The model consists of a generator that generates samples using a uniform distribution and a discriminator that discriminates between real images and generated images.

3 Model

Captions are represented as a sequence of consecutive words, and images are represented as a sequence of patches drawn on a canvas ct over time t = 1, 2, ..., T. The model can be viewed as part of the sequence-to-sequence framework.

Because both the caption and the image are treated as time series, the sequence-to-sequence framework can be applied.

3.1 Language Model

The caption is represented as a sequence of 1-of-K encoded words.

A bidirectional LSTM is used to model it.

3.2 Image Model

The conditional DRAW network is a stochastic recurrent neural network that consists of a sequence of latent variables Zt, where the output is accumulated over all T time steps.

The mean and variance of the prior distribution over Zt are:

The align function is used to compute the alignment between the input caption and intermediate image generative steps.

The formula is as follows:

The output of LSTM(gen) is passed to the write operator, whose output is added to a cumulative canvas matrix.

Finally, each entry cT,i of the final canvas matrix cT is transformed using a sigmoid function to produce a conditional Bernoulli distribution.

3.3 Learning

The model is trained to maximize a variational lower bound L on the marginal likelihood of the correct image x given the input caption y:

4 Evaluation

The main goal of this work is to learn a model that can understand the semantic meaning expressed in textual descriptions of images, such as the properties of objects and the relationships between them, and then use that knowledge to generate relevant images. The authors therefore change some words in the caption and check whether the model makes the corresponding changes in the generated samples.

DRAW: A Recurrent Neural Network For Image Generation

1 Introduction

People draw pictures not all at once, but in a sequential, iterative fashion. This work proposes an architecture that creates a scene over a series of time steps, refining the sketch successively.

The core of DRAW is a pair of recurrent neural networks: an encoder that compresses the real images and a decoder that reconstitutes images after receiving codes. The loss function is a variational upper bound on the negative log-likelihood of the data.

It generates images step by step, selectively attending to parts of the image while ignoring others.

The DRAW architecture is similar to other variational auto-encoders: an encoder network determines a distribution over latent codes that capture salient information about the input data; a decoder network receives samples from the code distribution and uses them to condition its own distribution over images.

2 The DRAW Network

2.1 Network Architecture

Q(Zt|h(t,enc)) is a diagonal Gaussian.

2.2 Loss Function

The final canvas matrix cT is used to parameterise a model D(X|cT) of the input data. D is a Bernoulli distribution.

reconstruction loss

latent loss
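The two loss terms can be sketched as follows. This assumes binary images, a standard normal prior over each latent variable, and that the encoder outputs a mean and standard deviation per step; the function names and array shapes are hypothetical.

```python
import numpy as np

def reconstruction_loss(x, canvas):
    """Negative log-likelihood of a binary image x under Bernoulli means
    sigmoid(canvas), i.e. -log D(x | final canvas)."""
    p = 1.0 / (1.0 + np.exp(-canvas))
    eps = 1e-8  # numerical guard, not part of the model
    p = np.clip(p, eps, 1.0 - eps)
    return -np.sum(x * np.log(p) + (1.0 - x) * np.log(1.0 - p))

def latent_loss(mus, sigmas):
    """KL divergence between the diagonal Gaussians Q(Zt | h(t,enc)) and a
    standard normal prior, summed over all T steps and latent dimensions.
    mus, sigmas: arrays of shape (T, latent_dim)."""
    mus = np.asarray(mus, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    return 0.5 * np.sum(mus ** 2 + sigmas ** 2 - np.log(sigmas ** 2) - 1.0)
```

The total loss would be the sum of the two terms; the latent term is zero exactly when every Q(Zt) equals the prior.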

2.3 Stochastic data generation

2.4 Read and Write Operation

An N*N grid of Gaussian filters is positioned on the image by specifying the coordinates of the grid center and the stride distance between adjacent filters.

(i,j) is a point in the attention patch, and (a,b) is a point in the input image.

The result is a weight matrix that quantifies how much each point in the image contributes to a single point A of the filter grid.
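A minimal sketch of the filterbank and the read operation, assuming a 2-D grayscale image, filter centres placed at g + (i - N/2 - 0.5) * stride for i = 1..N, and no intensity scaling. The function names are hypothetical.

```python
import numpy as np

def filterbank(center, stride, sigma2, N, size):
    """Return an (N, size) matrix F where F[i, a] is the weight of input
    position a for filter i; each row is normalized to sum to 1."""
    i = np.arange(1, N + 1)
    mu = center + (i - N / 2.0 - 0.5) * stride   # filter centres
    a = np.arange(size)
    F = np.exp(-(a[None, :] - mu[:, None]) ** 2 / (2.0 * sigma2))
    # Guard against all-zero rows when a filter lies far outside the image.
    return F / np.maximum(F.sum(axis=1, keepdims=True), 1e-8)

def read_patch(image, gx, gy, stride, sigma2, N):
    """Extract an N x N attention patch from a 2-D image."""
    height, width = image.shape
    Fx = filterbank(gx, stride, sigma2, N, width)    # (N, width)
    Fy = filterbank(gy, stride, sigma2, N, height)   # (N, height)
    return Fy @ image @ Fx.T                         # (N, N)
```

Each entry of the patch is a Gaussian-weighted average of image pixels, which is why the read stays differentiable with respect to the centre, stride, and variance.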

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

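Have I learned the various machine learning models with hidden variables? Neural networks, RBMs, and the various probabilistic graphical models.

The EM algorithm, variational inference, mean field, and other methods for solving models with hidden variables.

The convex optimization methods behind the algorithms above.

Derive the formulas.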

1 Introduction

In the past, image captioning models typically extracted image features from a fully connected layer of a CNN, yielding a single vector known as the CNN code. Rather than compressing an entire image into a static representation, attention allows salient features to dynamically come to the forefront as needed. The authors introduce two attention-based image caption generators under a common framework: (a) a soft deterministic attention mechanism trainable by standard back-propagation, and (b) a hard stochastic attention mechanism trainable by maximizing an approximate variational lower bound or, equivalently, by REINFORCE.

2 Image Caption Generation with Attention Mechanism

2.1 Encoder: convolutional features

The model takes a raw image as input and generates a caption y encoded as a sequence of 1-of-K vectors.

We extract L features from the CNN, each of which is a D-dimensional vector corresponding to a part of the image.

2.2 Decoder: LSTM

The LSTM generates one word at each time step, conditioned on the hidden state, the previously generated word, and a context vector (the image attention).

z is the context vector. It captures the visual information associated with a particular input location and serves as a dynamic representation of the relevant part of the image at each time step. More specifically, z is computed from the L features: each feature is assigned a coefficient representing its relative importance in the current context vector.
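For the soft (deterministic) variant, the coefficients can be read as a softmax over relevance scores, and z as the weighted sum of the features. The sketch below takes the scores as given; how they are produced from the features and the LSTM state is left out, and the function name is hypothetical.

```python
import numpy as np

def soft_attention(features, scores):
    """features: (L, D) feature vectors a_i; scores: (L,) unnormalized
    relevance scores. Returns (alpha, z) with z = sum_i alpha_i * a_i."""
    scores = np.asarray(scores, dtype=float)
    exp = np.exp(scores - scores.max())   # subtract max for stability
    alpha = exp / exp.sum()
    z = alpha @ np.asarray(features, dtype=float)
    return alpha, z
```

With equal scores, z is simply the mean of the L features; as one score dominates, z approaches the corresponding single feature.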

A deep output layer is used to compute the output word probability given the LSTM state, the context vector, and the previous word.

n is the dimension of the LSTM hidden state, and m is the embedding dimension.

2.3 Stochastic Hard Attention

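s(t,i) is a one-hot indicator variable that is set to 1 if the i-th location (out of L) is the one used to extract visual features at time t.

Ls, the objective function to be optimized, is a variational lower bound on the marginal log-likelihood log p(y|a) of the caption y given the image features a.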

Equation (11) is a Monte Carlo sampling approximation of the gradient with respect to the model parameters. It is computed by sampling the location st from a multinoulli distribution.

When a hard choice is made at every step, Equation (6) is a function that returns a sampled ai at each point in time, drawn from a multinoulli distribution parameterized by alpha.
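The sampling step alone can be sketched as below: one location is drawn from the multinoulli distribution defined by alpha, and the context vector is the single selected feature. This covers only the forward sampling, not the gradient estimator; the function name is hypothetical.

```python
import numpy as np

def sample_hard_attention(features, alpha, rng):
    """Draw a one-hot s_t ~ Multinoulli(alpha) and return (s_t, z_t),
    where z_t is the single selected feature vector."""
    L = len(alpha)
    idx = rng.choice(L, p=alpha)
    s = np.zeros(L)
    s[idx] = 1.0
    return s, s @ np.asarray(features, dtype=float)

rng = np.random.default_rng(0)
features = np.arange(8.0).reshape(4, 2)            # toy stand-in for CNN features
s_t, z_t = sample_hard_attention(features, [0.1, 0.2, 0.3, 0.4], rng)
```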

2.4 Deterministic Soft Attention

2.5 Training Procedure

Optimization methods: RMSProp and Adam.

How are the ai obtained? From the 14*14*512 feature map of the fourth convolutional layer of VGGNet, taken before max-pooling.

This means the decoder operates on the flattened 196*512 (L*D) encoding.
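The flattening is a plain reshape in which each of the 14*14 spatial positions becomes one D-dimensional feature. A small sketch, with a hypothetical function name and a zero array standing in for real CNN output:

```python
import numpy as np

def flatten_annotations(feature_map):
    """Turn an (H, W, D) convolutional feature map into (H * W, D)
    feature vectors, one per spatial location (row-major order)."""
    return feature_map.reshape(-1, feature_map.shape[-1])

feature_map = np.zeros((14, 14, 512))   # placeholder for the conv feature map
annotations = flatten_annotations(feature_map)
L, D = annotations.shape
```

Row i * 14 + j of the result corresponds to spatial position (i, j) of the feature map.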

Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models

1 Introduction

This work uses the encoder-decoder framework to solve the problem of image caption generation. For the encoder, the model learns a joint image-sentence embedding in which sentences are encoded using an LSTM and images are encoded using a CNN. A pairwise ranking loss is minimized in order to learn to rank images and their descriptions. For the decoder, the structure-content neural language model generates sentences conditioned on the distributed representations produced by the encoder.

2 Model Description

2.1 LSTM for modeling sentences

2.2 Multimodal distributed representations

K, D, and V are the dimension of the embedding space, the dimension of the image vector, and the vocabulary size, respectively.

Wi: K*D, Wt: K*V

For a sentence-image pair, v is the last hidden state of the LSTM, representing the sentence, and x = Wi*q is the image embedding, where q is the CNN code.

We optimize the pair-wise ranking loss.
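A sketch of one way to write such a loss, assuming cosine similarity as the score, the other pairs in the batch as contrastive examples, and a margin of 0.2; the margin value and function name are assumptions of this sketch.

```python
import numpy as np

def pairwise_ranking_loss(x, v, margin=0.2):
    """x: (B, K) image embeddings, v: (B, K) sentence embeddings; row i of
    x and row i of v form a matching pair. All other rows in the batch
    serve as contrastive (non-matching) examples."""
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    v = v / np.linalg.norm(v, axis=1, keepdims=True)
    s = x @ v.T                      # s[i, j] = cosine(x_i, v_j)
    pos = np.diag(s)                 # scores of the matching pairs
    # Image i should score higher with its own sentence than with sentence j.
    cost_sent = np.maximum(0.0, margin - pos[:, None] + s)
    # Sentence j should score higher with its own image than with image i.
    cost_img = np.maximum(0.0, margin - pos[None, :] + s)
    np.fill_diagonal(cost_sent, 0.0)
    np.fill_diagonal(cost_img, 0.0)
    return cost_sent.sum() + cost_img.sum()
```

The loss is zero once every matching pair outscores every mismatched pair by at least the margin.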

2.3 Log-bilinear neural language models

The first n-1 words (w1, w2, ..., wn-1), each represented by a K-dimensional vector, serve as the context for predicting the nth word. The Ci are the K*K context parameter matrices.

(Every word has an input vector wi and an output vector ri.)
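In a log-bilinear model, the context vectors are combined linearly into a predicted next-word representation, which is then compared with every output vector through a softmax. The sketch below assumes a bias vector b and the array shapes given in the docstring; the names are hypothetical.

```python
import numpy as np

def log_bilinear_probs(context_vectors, C, R, b):
    """context_vectors: (n-1, K) input vectors w_1..w_{n-1};
    C: (n-1, K, K) context matrices C_i; R: (V, K) output vectors r_i;
    b: (V,) biases. Returns P(w_n = i | w_1:n-1) for all V words."""
    # Predicted representation of the next word: r_hat = sum_i C_i w_i.
    r_hat = np.einsum('ikl,il->k', C, context_vectors)
    logits = R @ r_hat + b
    logits = logits - logits.max()   # subtract max for stability
    p = np.exp(logits)
    return p / p.sum()
```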

2.4 Multiplicative neural language models

Suppose we now have a vector u from the multimodal space that is associated with a word sequence; for example, u may be the embedded vector of an image. A multiplicative neural language model models the distribution P(wn=i|w1:n-1,u).

G = K,

2.5 Structure-content neural language models

Suppose we are given a sequence of word-specific structure variables T = {t1, t2, ..., tn}, along with a description S = {w1, w2, ..., wn}. Each ti may be, for example, a part-of-speech tag.

We then model the distribution of the next word conditioned on the previous word context w1:n-1 and the forward structure context tn:n+k, where k is the context size.

If the conditioning vector u is the embedding of the description sentence computed with the LSTM, a large amount of monolingual text can be used to improve the quality of the language model.

Deep Visual-Semantic Alignments for Generating Image Descriptions

1 Introduction

This paper uses a CNN to learn image region embeddings and a bidirectional RNN to learn sentence embeddings, maps both into a common multimodal space, and uses a structured objective to align the two modalities. It then proposes a multimodal RNN that learns to generate descriptions of image regions from the aligned image-sentence pairs.

2 Model Description

2.1 Learn to Align visual data and language data

First, an RCNN is used to extract 19 regions from the image; a pre-trained CNN then represents each region as a real-valued vector.

Wm: h*4096

2.2 Represent Sentence

A bidirectional recurrent neural network is used.

Word vectors are initialized with word2vec and kept fixed during training.

2.3 Alignment objective

The objective encourages aligned image-sentence pairs to have a higher score than misaligned pairs by a margin.

gk is the set of image regions in image k, and gl is the set of sentence fragments in sentence l.

Skl then measures how well sentence l and image k are aligned.
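One simple form of this score matches every word to its best region and sums the resulting dot products; the margin objective then compares true pairs against mismatched ones. The sketch below assumes that form and a margin of 1, and it skips the k = l terms, which only add a constant. The function names are hypothetical.

```python
import numpy as np

def image_sentence_score(regions, words):
    """regions: (M, h) region vectors of image k; words: (N, h) word
    vectors of sentence l. Each word is matched to its best region."""
    return (words @ regions.T).max(axis=1).sum()

def alignment_loss(images, sentences, margin=1.0):
    """images[k] and sentences[k] form a true pair."""
    n = len(images)
    S = np.array([[image_sentence_score(images[k], sentences[l])
                   for l in range(n)] for k in range(n)])
    diag = np.diag(S)
    # Rank the true sentence above other sentences for each image ...
    rank_sent = np.maximum(0.0, S - diag[:, None] + margin)
    # ... and the true image above other images for each sentence.
    rank_img = np.maximum(0.0, S - diag[None, :] + margin)
    np.fill_diagonal(rank_sent, 0.0)
    np.fill_diagonal(rank_img, 0.0)
    return rank_sent.sum() + rank_img.sum()
```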

2.4 Decoding text segment alignments to images

why not Vt*Sj?

(N words, M image regions, aj ∈ {1...M})

The aim is to align contiguous sequences of words to a single bounding box.

We minimize the energy to find the best alignments using dynamic programming. The output is a set of image regions annotated with segments of text.

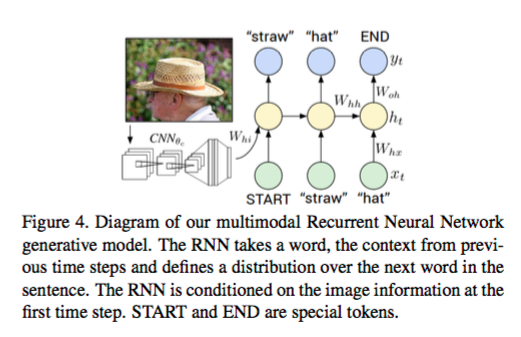
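The dynamic program can be sketched as Viterbi decoding over a chain of words. The sketch assumes the unary term is the word-region dot product, the pairwise term is a bonus beta when neighbouring words share a region, and that both are treated as scores to maximize; that sign convention and the function name are assumptions of this sketch.

```python
import numpy as np

def align_words_to_regions(unary, beta):
    """unary: (N, M) scores of word j against region i;
    beta: bonus when two neighbouring words are assigned the same region.
    Returns the best region index a_j for each of the N words."""
    N, M = unary.shape
    best = unary[0].copy()                # best score ending in each region
    back = np.zeros((N, M), dtype=int)    # back-pointers
    pairwise = beta * np.eye(M)           # pairwise[prev, cur]
    for j in range(1, N):
        cand = best[:, None] + pairwise   # (prev, cur)
        back[j] = cand.argmax(axis=0)
        best = cand.max(axis=0) + unary[j]
    a = np.zeros(N, dtype=int)
    a[-1] = best.argmax()
    for j in range(N - 1, 0, -1):         # trace the best path backwards
        a[j - 1] = back[j, a[j]]
    return a
```

A larger beta favours longer word segments per region, while beta = 0 reduces to assigning each word independently to its best-scoring region.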

2.5 Multimodal Recurrent Neural Network for generating descriptions

3 Limitations

The model can only generate a description of one input array of pixels at a fixed resolution.

A more sensible approach might be to first identify all entities, their mutual interactions, and the wider context before generating a description.

The approach also consists of two separate models. Going directly from an image-sentence dataset to region-level annotations as part of a single model trained end-to-end may be more desirable.

Deep Compositional Captioning: Describing Novel Object Categories without Paired Training Data

1 Introduction

In the past, image captioning models could only be trained on paired image-sentence corpora. To address this limitation, the authors propose a Deep Compositional Captioner that can generate descriptions of objects that do not appear in the paired corpora but are present in unpaired image data (object recognition datasets) and unpaired text data.

First, a lexical classifier and a language model are trained separately; they are then combined into a deep caption model that is trained on the paired dataset. Second, in the multimodal layer, knowledge from known objects is transferred to new objects seen only in the unpaired datasets.

There are two main approaches to captioning.

(1) CNN-RNN framework: high-level features are extracted from the image using a CNN trained for image classification (e.g. VGGNet); a recurrent network then learns to predict sentences conditioned on the image features and the previously predicted words.

(2) Constructing a multimodal space: recurrent language features and image features are embedded in a multimodal space. The multimodal embedding is then used to predict the caption word by word.

Zero-shot learning: images are mapped to semantic word vectors corresponding to their classes, and the resulting image embeddings are used to detect and distinguish between unseen and seen classes.

The idea is to transfer information from weights trained on image-sentence data to weights trained only on text data.

2 Deep Compositional Captioner

2.1 Deep Lexical Classifier

A CNN that maps images to semantic concepts. The concepts (adjectives, verbs, and nouns) are mined from the paired image-text data. The lexical classifier is trained by fine-tuning a pretrained CNN. The output is fi, where each index of fi corresponds to the probability that a particular concept is present in the image, so each image is in effect tagged with multiple labels.

2.2 Language Model

LSTM

2.3 Caption Model

Both language model and caption model are trained to predict a sequence of words, whereas the lexical classifier is trained to predict a fixed set of candidate visual elements for a given image.

2.4 Transfer Learning

(1)Direct transfer:

For example, the word "sheep" is in the paired dataset, and "alpaca" is not.

To score the word "alpaca", the model computes fi*Wi[:, ca] + fl*Wl[:, ca] + b[ca]. To transfer what the model has learned about sheep to alpaca (a semantically similar word), the weights are set as follows, in order:

Wi[:, ca], Wl[:, ca], b[ca] = Wi[:, cs], Wl[:, cs], b[cs]

Wi[ra, ca] = Wi[rs, ca]

Wi[rs, ca] = Wi[ra, cs] = 0
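The three assignments above can be sketched with NumPy arrays, applied in the order given. The function name and argument names are hypothetical; columns index output words and rows of Wi index lexical-classifier concepts.

```python
import numpy as np

def direct_transfer(W_i, W_l, b, c_new, c_known, r_new, r_known):
    """Copy the output weights of a known word (e.g. 'sheep') to a new word
    (e.g. 'alpaca'). Columns c_* index output words; rows r_* of W_i index
    lexical-classifier concepts. The arrays are modified in place."""
    W_i[:, c_new] = W_i[:, c_known]
    W_l[:, c_new] = W_l[:, c_known]
    b[c_new] = b[c_known]
    # The new word should respond to its own visual concept ...
    W_i[r_new, c_new] = W_i[r_known, c_new]
    # ... and neither word should fire on the other's concept.
    W_i[r_known, c_new] = 0.0
    W_i[r_new, c_known] = 0.0
    return W_i, W_l, b
```

The order matters: the second assignment reads Wi[rs, ca] after the column copy and before that entry is zeroed.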

(2) Delta transfer:

word2vec is used to determine word similarity.
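Choosing which known word to transfer from can be sketched as a nearest-neighbour search under cosine similarity. The embeddings are assumed to be a plain dictionary of vectors (for instance, exported from a word2vec model); the function name and the toy vectors in the usage lines are made up for illustration.

```python
import numpy as np

def closest_known_word(new_word, known_words, embeddings):
    """Return the known word whose embedding has the highest cosine
    similarity with new_word. embeddings: dict word -> 1-D vector."""
    q = embeddings[new_word] / np.linalg.norm(embeddings[new_word])

    def cosine(word):
        e = embeddings[word]
        return float(q @ e / np.linalg.norm(e))

    return max(known_words, key=cosine)

# Toy 2-D vectors, made up for illustration only.
toy = {"alpaca": np.array([1.0, 0.9]),
       "sheep": np.array([1.0, 1.0]),
       "bus": np.array([-1.0, 0.2])}
source_word = closest_known_word("alpaca", ["sheep", "bus"], toy)
```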