Sequence level training with recurrent neural networks

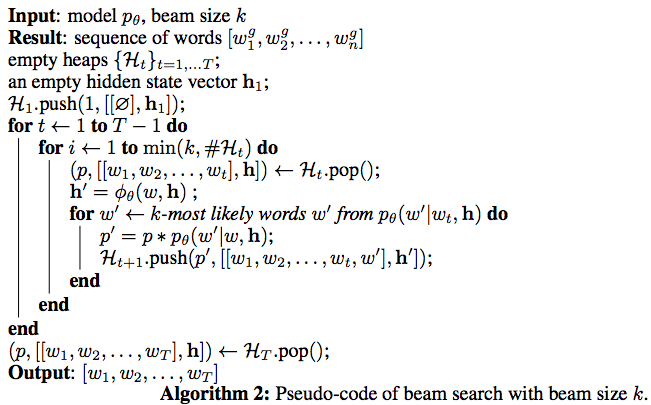

0 Beam Search Pseudo-Code
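A minimal Python sketch of standard beam search. It assumes a hypothetical `next_log_probs(prefix)` function that returns the log-probability of every candidate next word given the words generated so far. Hypotheses that emit `</s>` leave the beam; this is one common convention, not necessarily the one used in the original pseudo-code. The toy model is also hypothetical and exists only to exercise the function.

```python
import math
from typing import Callable, Dict, List, Tuple

Hypothesis = Tuple[List[str], float]  # (word sequence, cumulative log-probability)

def beam_search(
    next_log_probs: Callable[[List[str]], Dict[str, float]],
    beam_size: int,
    max_len: int,
    eos: str = "</s>",
) -> List[Hypothesis]:
    """Return up to beam_size hypotheses, highest total log-probability first."""
    beams: List[Hypothesis] = [([], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next word.
        candidates: List[Hypothesis] = []
        for prefix, score in beams:
            for word, log_p in next_log_probs(prefix).items():
                candidates.append((prefix + [word], score + log_p))
        # Keep only the beam_size best expansions.
        candidates.sort(key=lambda h: h[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            if seq[-1] == eos:
                finished.append((seq, score))  # completed hypothesis leaves the beam
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # hypotheses still open when max_len was reached
    finished.sort(key=lambda h: h[1], reverse=True)
    return finished[:beam_size]

def toy_model(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical two-word language model used only for illustration."""
    if not prefix:
        return {"a": math.log(0.6), "b": math.log(0.4)}
    if len(prefix) == 1:
        if prefix[0] == "a":
            return {"x": math.log(0.5), "y": math.log(0.5)}
        return {"z": math.log(0.9), "w": math.log(0.1)}
    return {"</s>": 0.0}  # always stop after two words
```

With this toy model, greedy decoding (`beam_size=1`) commits to "a" and ends with "a x" at probability 0.3, while a beam of 2 keeps "b" alive and finds "b z" at probability 0.36.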

1 Introduction

In the conventional seq2seq approach, the model is trained to predict the next word given the previous ground-truth words as input. At test time, the resulting model generates the entire sequence one word at a time, feeding each generated word back as the input at the next time step.
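The contrast between the two regimes can be sketched in a few lines of Python. This is an illustration under a simplifying assumption: the hypothetical `predict_next` "model" conditions only on the previous word, whereas a real RNN conditions on its whole hidden state.

```python
from typing import Callable, List

def teacher_forced_inputs(target: List[str], bos: str = "<s>") -> List[str]:
    """Training: the input at step t is the ground-truth word from step t-1."""
    return [bos] + target[:-1]

def free_running_decode(
    predict_next: Callable[[str], str], max_len: int, bos: str = "<s>", eos: str = "</s>"
) -> List[str]:
    """Test time: each predicted word is fed back as the next input."""
    outputs: List[str] = []
    prev = bos
    for _ in range(max_len):
        word = predict_next(prev)
        outputs.append(word)
        if word == eos:
            break
        prev = word  # the model's own prediction becomes the next input
    return outputs

# Hypothetical toy "model": maps the previous word to a predicted next word.
toy_model = {"<s>": "the", "the": "dog", "dog": "</s>", "cat": "</s>"}.get
```

For the target "the cat </s>", training always feeds the ground-truth prefix "the cat", but free-running decoding conditions on the model's own output "dog", a prefix it never saw during training.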

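This process is problematic for two reasons. First, the model is trained on a different distribution of inputs than the one it sees at test time: words drawn from the data distribution rather than words drawn from the model distribution (the paper calls this exposure bias). Second, the loss function used to train these models is at the word level. A popular choice is the cross-entropy loss, which maximizes the probability of the next ground-truth word, whereas the models are evaluated with sequence-level metrics such as BLEU or ROUGE.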
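Written out for a ground-truth sequence $w_1,\dots,w_T$ and model parameters $\theta$ (notation chosen here for illustration), the word-level cross-entropy loss decomposes into one term per time step, each conditioned on the ground-truth prefix:

$$\mathcal{L}_{\text{XENT}} = -\log p_\theta(w_1,\dots,w_T) = -\sum_{t=1}^{T} \log p_\theta(w_t \mid w_1,\dots,w_{t-1})$$

Each term only rewards the single correct next word; nothing in the loss looks at the quality of a complete generated sequence.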

This paper addresses both problems by feeding the model's own predictions back as inputs during training and by directly optimizing a sequence-level metric (such as BLEU or ROUGE).

2 Model

2.1 Data As Demonstrator

2.2 End-to-End Backprop (E2E)

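At time step t+1, the input is not a single ground-truth word but the k highest-scoring words predicted at step t, each contributing in proportion to its score. Because this input is a score-weighted combination rather than a discrete choice, errors can be back-propagated through the scores, so the model is trained end to end on its own predictions.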
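A minimal sketch of how the E2E input could be formed, in plain Python. It assumes the scores are the model's probabilities at step t and that the top-k scores are renormalized to sum to 1 before weighting; `e2e_next_input` and the embedding table are hypothetical names for illustration. Only the forward computation is shown; in an autodiff framework the same weighted sum is differentiable with respect to the scores.

```python
from typing import List

def e2e_next_input(probs: List[float], embeddings: List[List[float]], k: int) -> List[float]:
    """Hypothetical helper: the input vector for step t+1.

    probs[v] is the model's score for word v at step t and embeddings[v] is the
    embedding of word v. Renormalizing the top-k scores is an assumption here.
    """
    # Indices of the k highest-scoring words.
    top_k = sorted(range(len(probs)), key=lambda v: probs[v], reverse=True)[:k]
    total = sum(probs[v] for v in top_k)
    dim = len(embeddings[0])
    mixed = [0.0] * dim
    for v in top_k:
        weight = probs[v] / total  # contribution proportional to the word's score
        for j in range(dim):
            mixed[j] += weight * embeddings[v][j]
    return mixed
```

With k = 1 this reduces to feeding back the embedding of the single best word; a larger k keeps more of the predicted distribution in the next input.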

2.3 Sequence level training

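Sequence-level training uses reinforcement learning: the RNN is treated as a policy whose actions are the words it generates, and the reward is a sequence-level score (for example BLEU) of the complete generated sequence. The expected reward is maximized with the REINFORCE algorithm.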
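With a generated sequence $w^g = (w^g_1,\dots,w^g_T)$ sampled from the model $p_\theta$ and a sequence-level reward $r(w^g)$ (notation chosen here for illustration), the objective is the negative expected reward, and its gradient follows from the log-derivative (REINFORCE) identity:

$$\mathcal{L}_\theta = -\mathbb{E}_{w^g \sim p_\theta}\left[r(w^g)\right], \qquad \nabla_\theta \mathcal{L}_\theta = -\mathbb{E}_{w^g \sim p_\theta}\left[r(w^g)\,\nabla_\theta \log p_\theta(w^g)\right]$$

where $\log p_\theta(w^g) = \sum_{t=1}^{T} \log p_\theta(w^g_t \mid w^g_1,\dots,w^g_{t-1})$, the same per-step decomposition as in the cross-entropy loss, but over the model's own samples instead of the ground truth.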

In practice, the expectation over all possible generated sequences is approximated with a single sequence sampled from the model's distribution over actions.
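A minimal plain-Python sketch of the single-sample estimate. It assumes a toy policy whose per-step logits do not depend on the prefix (a real RNN policy conditions on its hidden state), and `reinforce_single_sample` is a hypothetical name. It samples one sequence, scores it with a caller-supplied sequence-level `reward`, and returns the estimated gradient of $-\mathbb{E}[r]$ with respect to the logits, using $\partial \log \mathrm{softmax}(z)_w / \partial z_v = [v = w] - \mathrm{softmax}(z)_v$. The optional `baseline` subtracts a constant from the reward, a standard variance-reduction trick that keeps the estimate unbiased.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_single_sample(
    logits: List[List[float]],             # logits[t][v]: toy policy, one softmax per step
    reward: Callable[[List[int]], float],  # sequence-level reward of the sampled word ids
    rng: random.Random,
    baseline: float = 0.0,
) -> Tuple[List[int], List[List[float]]]:
    """Sample one sequence; return it with the one-sample gradient of -E[r] w.r.t. logits."""
    sample: List[int] = []
    probs_per_step: List[List[float]] = []
    for step_logits in logits:
        p = softmax(step_logits)
        w = rng.choices(range(len(p)), weights=p)[0]  # sample one action (word id)
        sample.append(w)
        probs_per_step.append(p)
    advantage = reward(sample) - baseline
    grads: List[List[float]] = []
    for w, p in zip(sample, probs_per_step):
        # d log p(w) / d logits = onehot(w) - p; the leading minus is because we minimize -E[r].
        grads.append([-advantage * ((1.0 if v == w else 0.0) - p[v]) for v in range(len(p))])
    return sample, grads
```

Averaged over many samples, these estimates approach the exact gradient; a single sample is noisy but cheap, which is why the baseline matters in practice. In a full model, the per-step signal is back-propagated through the RNN instead of being applied to free-standing logits.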