Deep Visual-Semantic Alignments for Generating Image Descriptions

1 Introduction

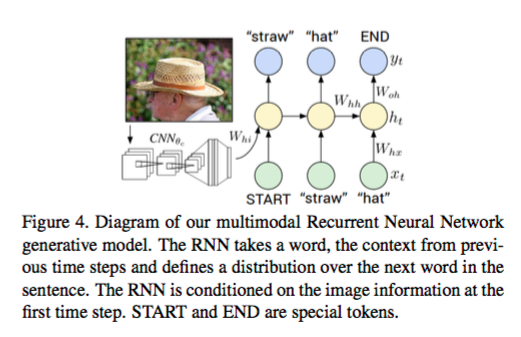

This paper uses a CNN to embed image regions and a bidirectional RNN to embed the words of a sentence, maps both into a common multimodal space, and trains them with a structured objective that aligns the two modalities. It then proposes a multimodal RNN that learns to generate descriptions of image regions from the aligned image-sentence pairs.

2 Model Description

2.1 Learn to Align visual data and language data

First, an RCNN detects 19 regions in the image; a pre-trained CNN then represents each region as a real-valued vector.

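W_m has dimensions h x 4096, where 4096 is the size of the CNN feature vector for a region and h is the size of the multimodal embedding space.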

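As a rough illustration of the projection step, the sketch below maps 4096-dimensional CNN features into an h-dimensional space with a single affine map. The bias `b_m`, the function name `embed_regions`, the tiny value of `h`, and the random stand-in features are all assumptions made for the demo, not values taken from the paper.

```python
import numpy as np

def embed_regions(cnn_features, W_m, b_m):
    """Project CNN region features (num_regions x 4096) into the h-dim multimodal space."""
    # One affine map shared by all regions: v = W_m * feature + b_m
    return cnn_features @ W_m.T + b_m

rng = np.random.default_rng(0)
h = 8             # hypothetical embedding size, kept tiny for the demo
num_regions = 19  # number of detected regions mentioned in these notes

# Random stand-ins for real CNN activations (assumption: no real detector is run here)
cnn_features = rng.standard_normal((num_regions, 4096))
W_m = rng.standard_normal((h, 4096)) * 0.01  # shape h x 4096
b_m = np.zeros(h)                            # bias term, an assumed part of the map

region_vectors = embed_regions(cnn_features, W_m, b_m)
print(region_vectors.shape)  # (19, 8)
```

Each row of `region_vectors` is one region expressed in the shared space, ready to be compared with word vectors by inner product.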

2.2 Represent Sentence

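Use a bidirectional recurrent neural network (BRNN) to compute the word representations, so that each word's vector reflects the context on both sides of it.

Initialize the word vectors with word2vec and keep them fixed during training.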
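The following is a minimal sketch of a bidirectional RNN encoder in this spirit: one pass reads the sentence left to right, another right to left, and the two hidden states are combined per word. The exact parameterization (ReLU activations, summing the two directions, the parameter names) is an assumption for illustration, and the random input vectors stand in for fixed word2vec embeddings.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def brnn_encode(word_vectors, params):
    """Return one h-dim vector per word, using both left and right context."""
    W_e, b_e, W_f, b_f, W_b, b_b, W_d, b_d = params
    n = len(word_vectors)
    h = b_e.shape[0]
    # Per-word projection of the (fixed) input word vectors
    e = [relu(W_e @ x + b_e) for x in word_vectors]

    # Forward direction: state at t depends on words 0..t
    h_fwd, prev = [None] * n, np.zeros(h)
    for t in range(n):
        prev = relu(e[t] + W_f @ prev + b_f)
        h_fwd[t] = prev

    # Backward direction: state at t depends on words t..n-1
    h_bwd, nxt = [None] * n, np.zeros(h)
    for t in reversed(range(n)):
        nxt = relu(e[t] + W_b @ nxt + b_b)
        h_bwd[t] = nxt

    # Combine both directions into the final word representation
    return np.stack([relu(W_d @ (h_fwd[t] + h_bwd[t]) + b_d) for t in range(n)])

rng = np.random.default_rng(0)
d, h, n = 5, 4, 3  # hypothetical sizes: word-vector dim, embedding dim, sentence length
params = (
    rng.standard_normal((h, d)), np.zeros(h),  # W_e, b_e
    rng.standard_normal((h, h)), np.zeros(h),  # W_f, b_f
    rng.standard_normal((h, h)), np.zeros(h),  # W_b, b_b
    rng.standard_normal((h, h)), np.zeros(h),  # W_d, b_d
)
word_vectors = rng.standard_normal((n, d))     # stand-in for word2vec vectors
sentence_vectors = brnn_encode(word_vectors, params)
print(sentence_vectors.shape)  # (3, 4)
```

Because the word vectors are held fixed, only the matrices in `params` would be updated during training in a setup like this one.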

2.3 Alignment objective

The objective encourages aligned image-sentence pairs to have a higher score than misaligned pairs by a margin.

g_k is the set of image regions in image k, and g_l is the set of sentence fragments in sentence l.

S_kl then measures how well sentence l and image k are aligned.
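To make the objective concrete, here is a small sketch under stated assumptions: the image-sentence score sums, over words, the best inner product with any region, and the loss is a max-margin ranking loss over a batch in which image k is paired with sentence k. The function names, the margin of 1, and the exclusion of the diagonal terms are choices made for this illustration.

```python
import numpy as np

def image_sentence_score(regions, words):
    """S_kl: each word picks its best-matching region; the similarities are summed."""
    sims = words @ regions.T       # (num_words, num_regions) inner products
    return sims.max(axis=1).sum()

def ranking_loss(images, sentences, margin=1.0):
    """Max-margin loss: the true pair (k, k) should outscore mismatched pairs."""
    n = len(images)
    S = np.array([[image_sentence_score(images[k], sentences[l])
                   for l in range(n)] for k in range(n)])
    diag = np.diag(S)
    # Rank sentences for each image (rows) and images for each sentence (columns)
    cost_s = np.maximum(0.0, S - diag[:, None] + margin)
    cost_i = np.maximum(0.0, S - diag[None, :] + margin)
    np.fill_diagonal(cost_s, 0.0)  # the true pair itself incurs no cost
    np.fill_diagonal(cost_i, 0.0)
    return float(cost_s.sum() + cost_i.sum())

# Toy batch: one region per image, one word per sentence, 2-dim embeddings
images = [np.array([[1.0, 0.0]]), np.array([[0.0, 1.0]])]
sentences = [np.array([[2.0, 0.0]]), np.array([[0.0, 2.0]])]

print(ranking_loss(images, sentences))        # 0.0  (correct pairs win by the margin)
print(ranking_loss(images, sentences[::-1]))  # 12.0 (pairs swapped, every term is violated)
```

In the first call each true pair scores 2 and each mismatched pair scores 0, so the margin is satisfied everywhere; swapping the sentences reverses that and every hinge term becomes active.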

2.4 Decoding text segment alignments to images

why not Vt*Sj?


(A sentence has N words and an image has M regions; each word j has an alignment variable a_j in {1, ..., M}.)

The aim is to align contiguous sequences of words to a single bounding box.

We minimize the energy to find the best alignments using dynamic programming. The output is a set of image regions annotated with segments of text.
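The dynamic-programming step can be sketched as a Viterbi pass over a chain of words. The sketch assumes the objective is the sum of word-region similarities plus a bonus `beta` whenever two neighboring words share a region, and it maximizes that total (equivalently, minimizes its negative as an energy). The sign convention, the name `align_words_to_regions`, and the toy similarity matrix are assumptions for illustration; regions are indexed from 0 here.

```python
import numpy as np

def align_words_to_regions(sim, beta):
    """sim[j, t] is the similarity of word j and region t. Returns a_1..a_N (0-based)."""
    n_words, n_regions = sim.shape
    best = np.zeros((n_words, n_regions))           # best score of a path ending at (j, t)
    back = np.zeros((n_words, n_regions), dtype=int)
    best[0] = sim[0]
    same = beta * np.eye(n_regions)                 # bonus when neighbors share a region
    for j in range(1, n_words):
        # cand[p, t]: best path ending in region p at word j-1, then moving to region t
        cand = best[j - 1][:, None] + same
        back[j] = cand.argmax(axis=0)
        best[j] = cand.max(axis=0) + sim[j]
    # Trace the best path backwards from the last word
    a = [int(best[-1].argmax())]
    for j in range(n_words - 1, 0, -1):
        a.append(int(back[j, a[-1]]))
    return a[::-1]

# Three words, two regions; the middle word weakly prefers region 1
sim = np.array([[1.0, 0.0],
                [0.4, 0.6],
                [1.0, 0.0]])

print(align_words_to_regions(sim, beta=0.0))  # [0, 1, 0]
print(align_words_to_regions(sim, beta=1.0))  # [0, 0, 0]
```

With `beta=0` every word simply takes its best region; raising `beta` smooths the assignment so that contiguous words end up on the same box, which is the behavior the alignment step is after.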

2.5 Multimodal Recurrent Neural Network for generating descriptions

3 Limitations

The model can only generate a description from a single input array of pixels at a fixed resolution.

A more sensible approach might be to identify all the entities in the image first, then use their mutual interactions and the wider context before generating a description.

The approach also consists of two separate models. Going directly from an image-sentence dataset to region-level annotations as part of a single model trained end-to-end may be more desirable.